Introduction

This document gives a basic tutorial on how to work with structural MRI data stored in NIfTI files. The first half gives a recap of the theory behind MRI machines and what kind of data we are actually working with. The second half is about visualizing neuroimaging data and manipulating it to draw correlation between disease severity and pathological manifestation (more specifically lesion size), by using the dataset including people with post-stroke aphasia. Hope you find it interesting and this tutorial inspires you to explore the topic more! ☺️

Before we begin: A little recap on MRI

To start working with neuroimaging, it’s important to understand the basics of MRI and what the images we see actually represent. The following text is based on Berger’s explanation and Azhar & Chong’s paper on MRI imaging (Azhar & Chong, 2023; Magnetic Resonance Imaging - PMC, n.d.). Feel free to check these out if something remains unclear!

Magnetic Resonance Imaging (MRI) is a technique used to visualize the internal structures of the body, and in our case, the brain. What we’re trying to do is quantify the properties of different tissues, such as gray matter, white matter, cerebrospinal fluid (CSF), or lesions. To achieve this, we use weighted MRI images. These images don’t have absolute or objective brightness values; instead, their intensities are relative and depend on how the MRI sequence is set up. By using several types of weighted images, we can extract meaningful information.

MRI works because water is magnetic, and our bodies are about 60% water. Each water molecule has two hydrogen atoms, and each hydrogen atom has a single proton at its nucleus, surrounded by one electron. These protons have a property called “spin” which gives a tiny magnetic field – like a miniature bar magnet.

Under normal conditions, the magnetic fields of these protons are randomly oriented. But when someone is placed in the strong magnetic field of an MRI scanner, more of these protons align with or against the field. The protons that align with the magnetic field are said to be in a “low-energy state”, while the protons that align against the magnetic field are said to be in a “high-energy state”. However, there is an imbalance, as more protons are in the low-energy state (because this is more energetically favorable), so the magnetic fields of the protons aligned with the field don’t get completely cancelled out by the protons aligned against it. What is left, creates a net magnetization vector in the direction of the MRI scanner’s magnetic field. This is what the MRI uses as a “starting point”.

The MRI scanner then uses a technique called resonance to gather information. It sends out radiofrequency (RF) pulses to the low-energy protons, causing them to flip to the high-energy state. When the RF pulse is turned off, the protons gradually return to their original alignment. As they do, they release a tiny amount of electromagnetic energy, which is picked up by RF coils placed around the body. These signals differ based on the type of tissue, the amount of water or fat it contains, and how the molecules behave. Stronger signals are associated with tissues that have more hydrogen atoms, like in areas of edema or inflammation, while the timing of the signal tells us about the environment and state of the tissue.

Two important measurements in MRI are T1 and T2 relaxation times, which create contrast in the MRI image:

-

T1 relaxation – How quickly protons realign with the magnetic field

-

T2 relaxation – How quickly protons fall out of sync (lose phase coherence) with each other after the RF pulse, when protons are spinning together in coherence

These two properties are used to generate T1-weighted and T2-weighted images, which emphasize different tissue characteristics.

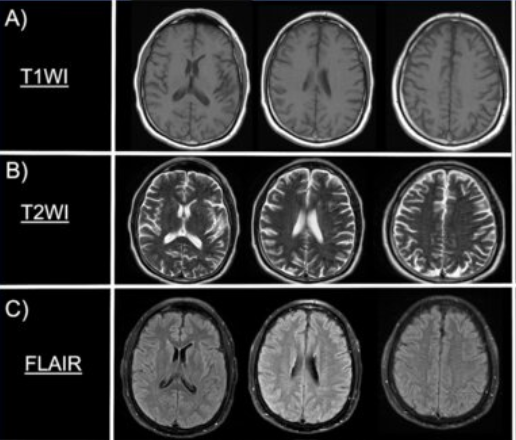

T1-weighted imagesprovide a clear picture of brain anatomy and is excellent for structural imaging. When a contrast agent is used, active or inflamed lesions can become more visible. On T1 images, fat and suba-cute blood typically appear bright, while fluids like CSF, edema, and lesions appear dark. You can think of T1 like viewing the brain under regular lighting—it shows you structure and clarity.

T2-weighted images aremore sensitive to water content and is particularly good for spotting fluid, inflammation, or other pathological changes. In T2 images, things like CSF, edema, cysts, and lesions appear bright, while fat, calcifications, and bone tend to appear dark. You might imagine T2 as looking at the brain under blacklight—suddenly all the ‘wet’ or abnormal areas light up.

Sometimes, T2 images are modified using a sequence called FLAIR (Fluid-Attenuated Inversion Recovery). CSF appears very bright on T2, which can make it hard to see subtle lesions near the ventricles or cortex. FLAIR solves this by suppressing the CSF signal, allowing small lesions to stand out while maintaining the T2 sensitivity of surrounding tissues. This makes FLAIR ideal for spotting MS plaques, small metastases, or cortical strokes. On FLAIR images, edema, demyelination, tumors, and strokes appear bright, while normal CSF appears dark—like turning off the glow from water to focus on hidden areas.

See Figure 1. for a visual representation on how T1, T2 and FLAIR images appear from an MRI scan.

There are of course also other types of sequences, but in this tutorial we will be mainly focusing on structural MRI and more specifically the T1 and T2 weighted images.

Note: the picture was taken from: Rodriguez-Hernandez A, Babici D, Campbell M, Carranza-Reneteria O, Hammond T. Hypoglycemic hemineglect a stroke mimic. eNeurologicalSci. 2023 Jan 17;30:100444.

Now that we have the basics, let’s try to understand what kind of data is actually portrayed on these MRI scans and what we can do with them.

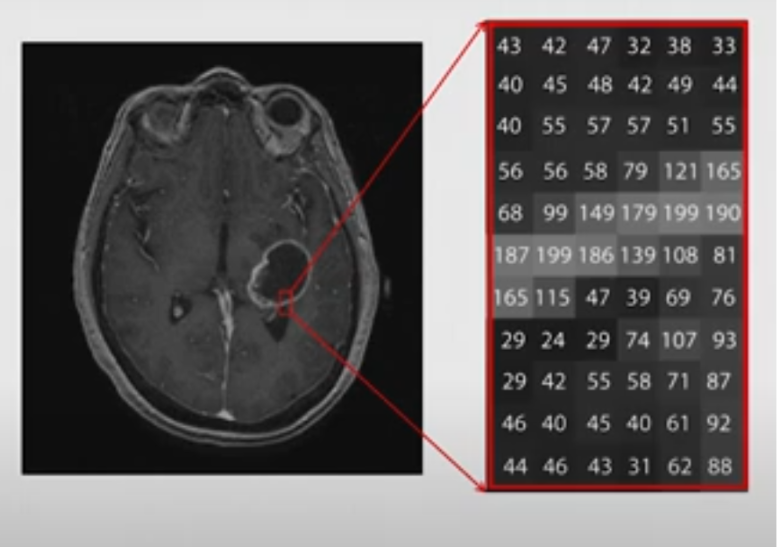

An MRI picture is like a 3D map of our brain, which is built from approx. 7 million of tiny building blocks, called voxels. A voxel is a 3D pixel. It’s a little cube of brain tissue, and the MRI machine measures how water behaves in it and assigns it a number. That number becomes the shade of gray in the picture. Dark areas have low numbers (less signal), while bright areas have high numbers (more signal).

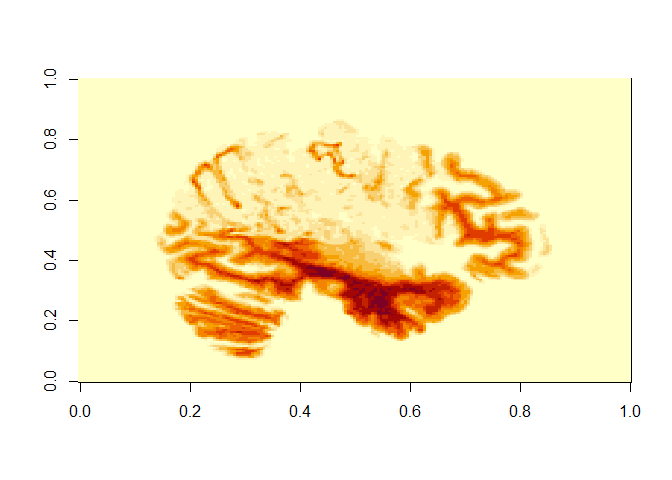

However, whether something appears bright or dark depends on the contrast type (so if it’s T1 or T2 for example). What changes between T1 and T2 is what kind of tissue gives off a strong or weak signal. For example: in T1 the CSF appears dark, meaning it has a lower signal, and a lower number assigned. On the other hand, in the T2 the CSF appears bright, meaning it has a higher signal, and a higher number assigned. See Figure 2. of a single, axial slice of the brain, showing how to imagine the portrayal of the “data” on these MRI pictures. Although, here a single slice is presented for better understanding, keep in mind that we usually look at the whole of the brain, which is made up of many-many different slices.

Note: this picture was taken from the video: Elizabeth Sweeney - Neuroimaging Analysis in R [Internet]. 2019 [cited 2025 Apr 18]. Available from: https://www.youtube.com/watch?v=9HkEq01nrco

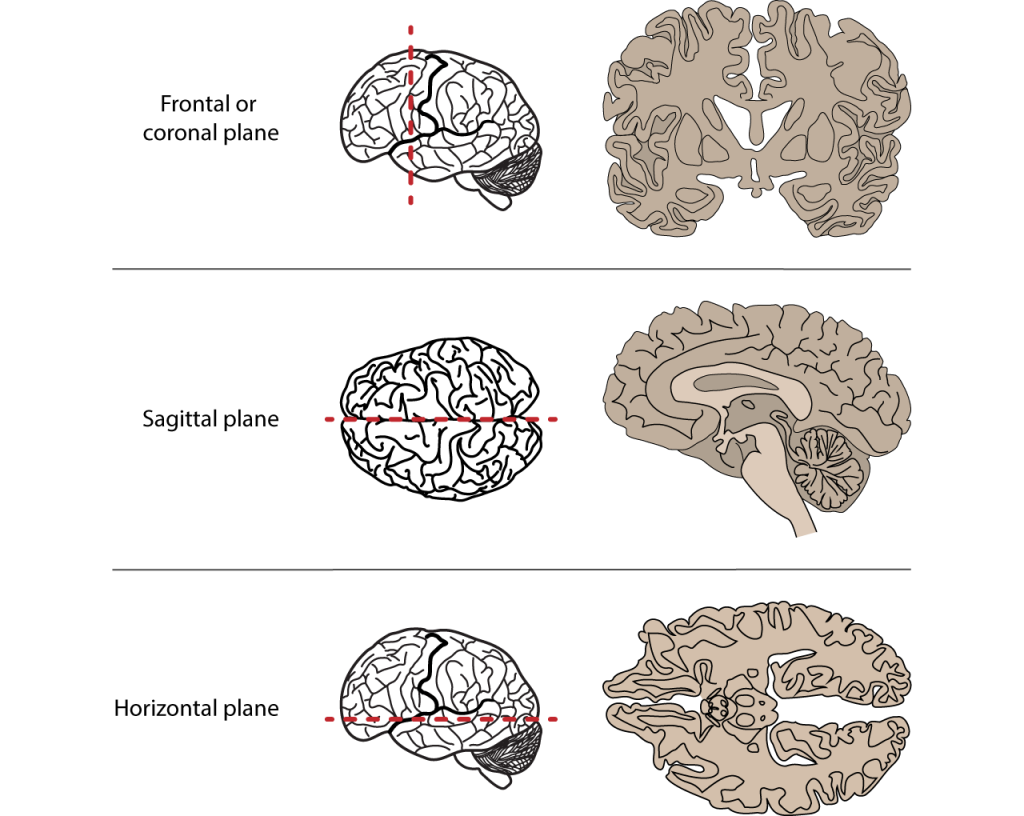

In this tutorial we will be working with NIfTI files (in ‘nii.’ or ‘nii.gz’ formats), which is the standard file format for storing MRI brain imaging data (both the image data and metadata, like voxel size, orientation etc.). It is a 3D array (X, Y, Z), where:

- X is the width (left to right in the brain)

- Y is the depth (front/back (anterior/posterior))

- and Z is the height (up/down (superior/inferior).

Let’s say that we want to take a slice of the brain at the 125th index “deep” in the brain, including its whole width and height (creating a coronal view). Figure 3. shows how we can do this, and how you should imagine this in the 3D plane.

![Figure 3. Visual representation about the different axes of the 3D arrays of NIfTI files. The axes are denoted in black. When we call these different slices we use a format like this: data [X, Y, Z]. If we want to include the whole width and height, we can just leave a comma at the place of X and Z. So, the red section represents the 125th slice along the Y axis (which is the axis of anterior-to-posterior), including its whole width and whole height (so represented as: [, 125 ,])](../../assets/img/neuroimaging/3D_Brain_Axes.png)

For future reference, the different axes of the brain are also visualized in the figure below. It’s good to have this on hand when working with neuroimaging.

Description of the ARC dataset

For this tutorial we will be using the Aphasia Recovery Cohort (ARC) Dataset, which is a collection of data from multiple studies conducted over several years. This is a large, open-access neuroimaging dataset, that contains longitudinal data from 230 individuals with post-stroke aphasia. The dataset includes multimodal MRI data (T1, T2, FLAIR, fMRI, DWI), detailed behavioral assessments (via the Western Aphasia Battery (WAB)) and demographic information. The dataset contains scanning sessions across different time points, ranging from days to years post-stroke.

Enrollment requirements included individuals who had experienced a left-hemisphere stroke at least 6 months (or 12 months for some studies) prior to enrollment, between the ages of 21 and 80 years old, with no contraindications to MRI or additional neurological impairments (such as multiple sclerosis, Parkinson’s, dementia, etc). It is important to note that many individuals participated in multiple studies.

If you would like to learn more about a specific aspect of the study, see the link taking you to the publication.

However, the scans that are included in the ARC dataset are raw MRI scans. This means that the scans came directly from the scanner, with no preprocessing applied. This means that the images are still in the original orientation and the position that the person’s head was during the scan. Because of this and also the variability of brains between individuals makes it difficult to compare multiple brains. Therefore, the MRI scans have to be “spatially normalized”, meaning that they have to be transformed to match a standard template (a common reference brain). Additionally, these images still include non-brain structures like the skull, which provide unnecessary data and thus interfere with our data analysis.

If you are new to neuroimaging, working with raw data can be tricky, because it often need a lot of pre-processing before it can used in analysis or visualization.

Luckily, the researchers behind the ARC dataset provided post-processed images for educational purposes that can be accessed through this link. These images include:

- normalized brain scans, where each scan has been aligned to a standard template,

- lesion masks, which highlight the damaged brain regions in each participant’s brain,

- lesion incidence maps, which show which brain regions are most affected across the whole group.

These post-processed images are what we’ll be using for the next steps of the tutorial, as they let us focus on interpreting results rather than struggling with preprocessing.

Description of the libraries and packages.

We will be using these packages:

-

oro.nifti- This package is the core R package for working with NifTI files. It writes and loads NifTI files into R as 3D or 4D arrays. It’s basic functions include:readNIfTI()andwriteNifTI() -

neurobase- This package is a part of the Neuroconductor project, which hosts a collection of R packages specifically for neuroimaging analysis, building on R’s existing ecosystem. It builds on oro.nift, and adds helpful functions for image math, masks, plotting, etc.

The earlier mentioned Neuroconductor system can also come in handy later for your future projects. If you type this in R you will be able to download and run an R script hosted on the Neuroconductor website:

source("https://neuroconductor.org/neurocLite.R")

That script defines the neuro_install() function, which can be used to

install any Neuroconductor package like this:

neuro_install("neurobase")

So, let’s get started!

As stated earlier, we will be using the post-processed images. For now let’s use the NifTi file that is called “wbsub-M2001_ses-1253x1076_T1w.nii.gz” and you will find it under ‘files’ within the project’s folder.

Here is what each part of this file name means:

-

‘sub-M2001’ corresponds to the subject ID

-

‘ses-1253x1076’ means that they likely combined scans from session 1253 and session 1076. The numbers refer to how many days after the stroke the given imaging session took place, so in this case it was 1076 and 1253 days post-stroke

-

‘T1w’ means the scan type.

Getting Started

Loading our packages and files

First you will have to install the packages and only then will you be able to load them in from the library:

install.packages("oro.nifti")

install.packages("neurobase")

install.packages("tidyverse")

library(oro.nifti) # handling NifTI images

library(neurobase) # has additional neuroimaging analysis tools

library(tidyverse)

Next we will load in our NIfTI file from patient M2001. Since we are

using one file for now, it is easier if you download it into your local

folder, where your R file is also saved (! this is important). To load

our file we will use the read NIfTI command from the oro.nifti

package. You can download the file using this

link.

t1_img_M2001 <- readNIfTI("wbsub-M2001_ses-1253x1076_T1w.nii", reorient = TRUE)

The reorient = TRUE ensures that the image is aligned properly in a

standard orientation, which is useful for consistency.

Checking image properties

Before we can do anything with pretty pictures, we have to inspect the properties of our NIfTI file. It’s good to know the physical size of the voxels, for accurate measurements of brain structures as well as understanding the orientation, so that the anatomical structures are correctly identified and the analyses are consistent across datasets.

First, we can check the number of voxels along each axis. This helps us understand the resolution and size of the 3D image. Run this chunk of code and see the output:

dim(t1_img_M2001)

## [1] 157 189 156

However, maybe it is smarter to have a comprehensive summary of the NIfTI object, including data type (each voxel’s intensity is stored as a 16-bit signed integer), dimensions, (number of voxels in X,Y and Z planes), pixel dimension (indicates how much each voxel measures) and voxel units.This provides a quick overview of the image’s metadata and structure.

t1_img_M2001

## NIfTI-1 format

## Type : nifti

## Data Type : 4 (INT16)

## Bits per Pixel : 16

## Slice Code : 0 (Unknown)

## Intent Code : 0 (None)

## Qform Code : 2 (Aligned_Anat)

## Sform Code : 2 (Aligned_Anat)

## Dimension : 157 x 189 x 156

## Pixel Dimension : 1 x 1 x 1

## Voxel Units : mm

## Time Units : sec

Next, we can also list all the metadata slots available in the NIfTI object, which allows us to see what additional information is stored and is accessible within the object. We can do this by typing this:

slotNames(t1_img_M2001)

## [1] ".Data" "sizeof_hdr" "data_type" "db_name"

## [5] "extents" "session_error" "regular" "dim_info"

## [9] "dim_" "intent_p1" "intent_p2" "intent_p3"

## [13] "intent_code" "datatype" "bitpix" "slice_start"

## [17] "pixdim" "vox_offset" "scl_slope" "scl_inter"

## [21] "slice_end" "slice_code" "xyzt_units" "cal_max"

## [25] "cal_min" "slice_duration" "toffset" "glmax"

## [29] "glmin" "descrip" "aux_file" "qform_code"

## [33] "sform_code" "quatern_b" "quatern_c" "quatern_d"

## [37] "qoffset_x" "qoffset_y" "qoffset_z" "srow_x"

## [41] "srow_y" "srow_z" "intent_name" "magic"

## [45] "extender" "reoriented"

From all of this the most important thing is that we know the dimension of the images: 157 × 189 × 156. This means that it comprises 157 voxels in the x-axis, 189 in the y-axis, and 156 in the z-axis.

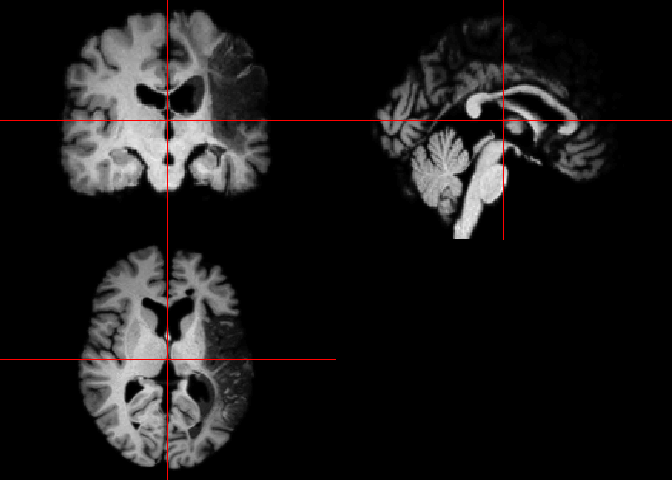

Visualizing our brain images

Now let’s look at our image. We can do this two ways. If we want a quick

visualization of our image, the orthographic() function from the

oro.nift package displays NIfTI objects in an orthogonal view, across 3

different planes (axial, sagittal and coronal). This way of looking at

our data is more neuroimaging-specific and offers a convenient way to

inspect 3D images. The picture is also very cool, and could even be

published! You will see the image under “Plots” in R. Run this code to

see the visual output we get:

orthographic(t1_img_M2001)

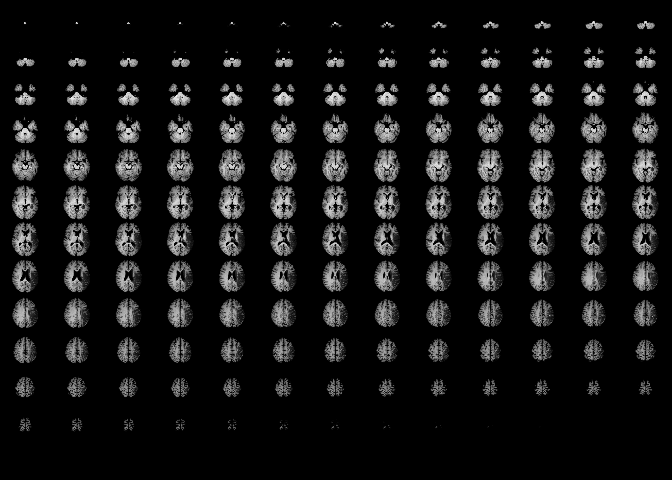

The other option is to use the image() function from the oro.nifti

package. This shows a mosaic view with all slices in a given orientation

from a brain MRI. It might take a few minutes to run the code below. Can

you recognize which view these are oriented in?

image(t1_img_M2001)

Here we can see that it portrays consecutive slices row-wise from inferior to superior. We can see that the brain has been well pre-processed. The brain structures are symmetrical and clean, and there is no visible skull tissue, indicating successful brain extraction. There is also a good contrast between the white and gray matter. Keep in mind that this simplifies the portrayal of the brain into axial slices, but in reality we have a 3D array of voxels from the MRI scan.

If we would like to extract a specific slice from the array we have to

specify its position along the 3 axes. We can do this by using the

image() function from the oro.nifti package.

This line of code displays the sagittal slice at index 125 (so we have to specify which slice we want to get along the X axis, representing the left-to-right dimension). See the description in the beginning with the 3D picture of the brain and the annotations (Figure 3). This can be especially useful for examining structures along the mid-line of the brain or assessing lateralized features.

image(t1_img_M2001[125,,])

# the commas represent that we include all indexes along X and Y axes

The X and Y axis on this picture that is produced actually translates as:

-

X axis (on output) is actually the Y plane of the brain (so left-to-right)

-

Y axis (on output) is actually the Z plane of the brain (so front-to-back)

Lesion volume calculation

Let’s say we would like to calculate the volume of the lesion this patient has.

To do this, we would need a lesion mask. A lesion mask is a binary image used in neuroimaging to identify and isolate regions of brain tissue that have been damaged due to conditions such as stroke. In this mask, each voxel (a 3D pixel) is assigned a value:

-

1 indicates the presence of a lesion

-

0 indicates healthy or unaffected tissue

This allows for the calculation of lesion volume by summing the number of voxels labeled as lesions (so 1) and multiplying by the volume of each voxel.

Lesion masks can be created from the MRI images, however it has to be done manually with external tools, like FSLeyes. Unfortunately, R does not provide built-in tools for manual lesion delineation.

Although it is very interesting to learn how to use these tools, we will be working with a lesion mask that is already provided for subject M2001. This is also available on the GitHub page with the processed images (in the ‘NIfTI’ folder, if you search for your subject with Ctrl+F there will be two files corresponding, one is the MRI scan and one is the extracted brain lesion).

We read this lesion mask, similar to how we did the MRI scan. You can download all lesion masks files we will work with from GitHub. In the same folder as your R script, create a folder called “lesion_masks”/. Then click on each file in the GitHub folder, and find the “Download raw file” button in the top right. Make sure to move the downloaded files into your lesion_masks folder. Then the following file path will work.

lesion_mask <- readNIfTI("lesion_masks/wsub-M2001_ses-1253x1076_lesion.nii", reorient = TRUE)

Then we look at a summary of this NIfTI object. We can do this by simply running the variable we stored the lesion mask in. Keep an eye out on the ‘Pixel Dimension’ as we will need to use this later to calculate the lesion volume:

lesion_mask

## NIfTI-1 format

## Type : nifti

## Data Type : 2 (UINT8)

## Bits per Pixel : 8

## Slice Code : 0 (Unknown)

## Intent Code : 0 (None)

## Qform Code : 2 (Aligned_Anat)

## Sform Code : 2 (Aligned_Anat)

## Dimension : 157 x 189 x 156

## Pixel Dimension : 1 x 1 x 1

## Voxel Units : mm

## Time Units : sec

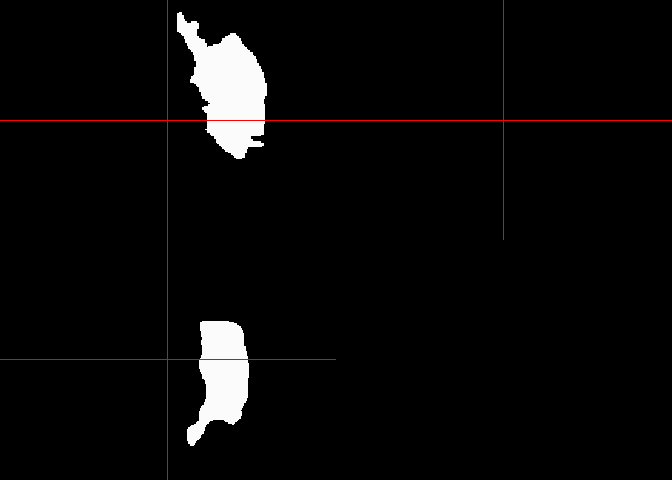

If you are curious what this looks like just run image(lesion_mask) or

orthographic(lesion_mask).

Okay now the calculating begins. First we will count the number of voxels labeled as 1 (remember that 1 indicates the presence of a lesion).

orthographic(lesion_mask)

lesion_voxels <- sum(lesion_mask == 1)

Then, we retrieve voxel dimensions (in mm) via the pixdim() function.

The array in the NIfTI header contains scaling information for each

dimension of the image data (you can get this information by running

lesion_mask@pixdim).

(The output if we run lesion_mask\@pixdim is : -1 1 1 1 0 0 0 0)

-

The first value indicates the orientation of the image axes. For this example it would be -1, which suggests left-handed coordinate system (while 1 would indicate a right-handed system).

-

The second, third and fourth value in this array indicates the voxel dimensions along the x, y, and z axes, respectively. In our case these are all 1.

-

The rest of the values are usually used for other dimensions like time. In our case they are 0, indicating that they are not used in our dataset.

So we are only interested in the second to fourth value from the ‘pixdim’ array, meaning we will have to filter these out with the use of the square-brackets.

voxel_dims <- pixdim(lesion_mask)[2:4]

Then we calculate the volume of a single voxel in mm³. We will be doing

this via the prod() function, which is part of R’s base package.

voxel_volume_mm3 <- prod(voxel_dims)

Now we calculate the product of the number of voxels (lesion_voxels)

and the volume of a single voxel (voxel_volume_mm3) to get the total

lesion volume.

lesion_volume_mm3 <- lesion_voxels * voxel_volume_mm3

Now we can have a nice output of our result by using the cat() base R

function, which concatenates and outputs its arguments:

cat("Lesion Volume:", lesion_volume_mm3, "mm³")

## Lesion Volume: 201832 mm³

There is a table also linked to this dataset that contains participants’ information such as sex, age at stroke, race, the days after the stroke when participant took the WAB (Western Aphasia Battery) test, the Aphasia Quotient (AQ) from this test (which is a score out of 100, measuring language function in people with brain injury (like stroke) - see Table 1. on how to interpret the scores for the AQ part of the WAB) and the type of aphasia.

Table 1. WAB-AQ scores

| AQ Score | Severity |

|---|---|

| 0-25 | Very severe |

| 26-50 | Severe |

| 51-75 | Moderate |

| 76+ | Mild |

Note: The information for this table was taken from: Western Aphasia Battery (WAB) – Strokengine

We can load the table containing all this information by directly from

GitHub with the following code. Hit View(df) to see the data frame:

df <- read_tsv("https://github.com/ucrdatacenter/projects/raw/refs/heads/main/misc/neuroimaging_tutorial/participants.tsv")

View(df)

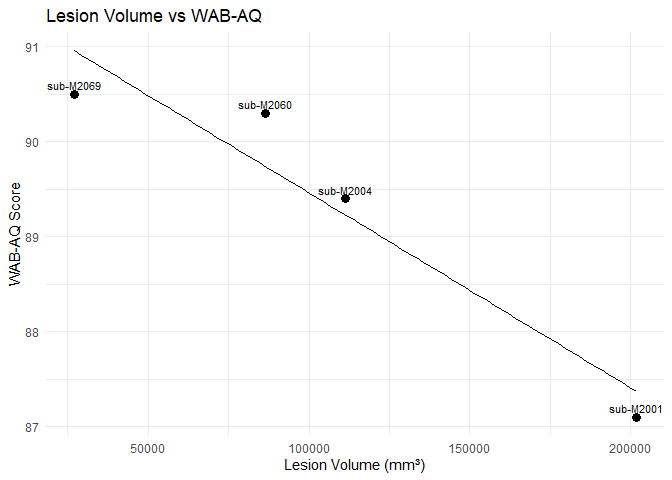

Let’s put to use what we learnt. Let’s say we want to see how lesion size and WAB-AQ scores correlate. We can do this by following these steps:

Let’s pick subjects M2001, M2004, M2060 and M2069 who all have the same type of aphasia (anemic, which is a type of aphasia where patients find it hard to find words, but have near-normal speech).

Let’s make a function to calculate the lesion volume using what we did for M2001. We can do this by:

calculate_lesion_volume <- function(mask_path) {

lesion_mask <- readNIfTI(mask_path, reorient = TRUE)

lesion_voxels <- sum(lesion_mask == 1)

voxel_dims <- pixdim(lesion_mask)[2:4]

voxel_volume_mm3 <- prod(voxel_dims)

lesion_voxels * voxel_volume_mm3

}

How this works is the following:

-

the function is called:

calculate_lesion_volume -

this function will take a variable that is the path to the lesion mask:

function(mask_path) -

the following are just copied from what we did earlier:

lesion_mask <- readNIfTI(mask_path, reorient = TRUE)

lesion_voxels <- sum(lesion_mask == 1)

voxel_dims <- pixdim(lesion_mask)[2:4]

voxel_volume_mm3 <- prod(voxel_dims)

lesion_volume <- lesion_voxels * voxel_volume_mm3

Now we will use these functions to compute lesion volume per subject. We call function for each subject’s lesion mask files (M2001, M2004, M2060, and M2069). This will return a number (volume in mm³ for each participant), which will be stored in named variables (lesion_volume_2001, lesion_volume_2004 etc. - see on the right under ‘Values’):

lesion_volume_M2001 <- calculate_lesion_volume("lesion_masks/wsub-M2001_ses-1253x1076_lesion.nii")

lesion_volume_M2004 <- calculate_lesion_volume("lesion_masks/wsub-M2006_ses-2381x1773_lesion.nii")

lesion_volume_M2060 <- calculate_lesion_volume("lesion_masks/wsub-M2060_ses-220_lesion.nii")

lesion_volume_M2069 <- calculate_lesion_volume("lesion_masks/wsub-M2069_ses-5818_lesion.nii")

Now we will use the table with the participant information.

We add the lesion volume variable to this table, but first we create a data frame where we store the participant id with the matching lesion volume. We create the new table like this:

lesion_volumes <- tibble(

participant_id =c("sub-M2001", "sub-M2004", "sub-M2060", "sub-M2069"),

lesion_volume_mm3 = c(lesion_volume_M2001, lesion_volume_M2004, lesion_volume_M2060, lesion_volume_M2069)

)

Now we can merge this table with the bigger participants table (df) to

do analysis. We do so by using the inner_join() function, which merges

rows from the df and lesion_volumes table only where participant_id

matches in both:

merged_data <- inner_join(df, lesion_volumes, by = "participant_id")

Finally, we can do our analysis. Let’s do a correlation analysis to see

whether higher WAB-AQ scores correlate positively to higher lesion

volumes. The cor.test() function performs a Pearson’s correlation

test, using the lesion volume and WAB-AQ scores as arguments. This

outputs the correlation coefficient, p-value and other statistics.

cor.test(merged_data$lesion_volume_mm3, as.numeric(merged_data$wab_aq))

##

## Pearson's product-moment correlation

##

## data: merged_data$lesion_volume_mm3 and as.numeric(merged_data$wab_aq)

## t = -4.6195, df = 2, p-value = 0.04381

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## -0.9991117 0.0603890

## sample estimates:

## cor

## -0.9561948

So the correlation is a negative value (-0.5635701), indicating a trend where larger lesion volume indicates lower WAB-AQ scores. The p-value is > 0.05 (it is 0.4364), meaning that this is not statistically significant. However, we have a very small sample size (4 participants) so that can explain why our results are so insignificant.

However, it would be nice to visualize this correlation. Let’s create a scatter plot, using ggplot, with the lesion volume on the X-axis, and the WAB-AQ score on the Y-axis.

To add a regression line we use geom_smooth, where we set method = “lm” to specify the linear model, and additionally set se=FALSE, to hide the shaded confidence interval around the line (optional).

ggplot(merged_data, aes(x = lesion_volume_mm3, y = wab_aq, label = participant_id)) +

geom_point(size = 3, color="black") +

geom_smooth(method = "lm", se = FALSE, color = "black", size=0.6) +

geom_text(vjust = -0.5, size = 3) + # participant labels

labs(

title = "Lesion Volume vs WAB-AQ",

x = "Lesion Volume (mm³)",

y = "WAB-AQ Score"

) +

theme_minimal()

We can see a trend that could be negative correlation between higher WAB-AQ scores and larger lesion volumes. Is this a result you expected? You can also explore different aphasia types and see whether this correlation is also present in those.

Hope you enjoyed this tutorial and you feel more inspired to explore its potential more in R.

Some other useful resources if you are interested in neuroimaging:

-

The Neuroconductor webpage has three online-courses: Courses | Neuroconductor. I highly recommend the one called “Imaging in R”.

-

There are two videos from Elizabeth Sweeney that give a very nice insight into what is possible with Neuroimaging in R. I recommend checking it out if you are interested:

-

Elizabeth Sweeney - Neuroimaging Analysis in R (16:45 minutes)

-

Neuroimaging Analysis in R: Image Preprocessing (51:12 minutes)

-

References

Azhar, S., & Chong, L. R. (2023). Clinician’s guide to the basic principles of MRI. Postgraduate Medical Journal, 99(1174), 894–903. https://doi.org/10.1136/pmj-2022-141998

Magnetic resonance imaging—PMC. (n.d.). Retrieved 14 May 2025, from https://pmc.ncbi.nlm.nih.gov/articles/PMC1121941/

Rodriguez-Hernandez A, Babici D, Campbell M, Carranza-Reneteria O, Hammond T. Hypoglycemic hemineglect a stroke mimic. eNeurologicalSci. 2023 Jan 17;30:100444.

Elizabeth Sweeney - Neuroimaging Analysis in R [Internet]. 2019 [cited 2025 Apr 18]. Available from: https://www.youtube.com/watch?v=9HkEq01nrco

Neuroimaging Analysis in R: Image Preprocessing—YouTube. (n.d.). Retrieved 2 May 2025, from https://www.youtube.com/watch?v=6tDbdNTwEuA&t=2288s

APROCSA dataset. (n.d.). Retrieved 2 May 2025, from https://langneurosci.org/aprocsa-dataset/

Courses | Neuroconductor. (n.d.). Retrieved 2 May 2025, from https://neuroconductor.org/courses

John Muschelli—About. (n.d.). Retrieved 2 May 2025, from https://johnmuschelli.com/